Ente is an end-to-end encrypted cloud for your photos.

While end-to-end encryption guarantees privacy, it also guarantees that our servers cannot run algorithms to derive meaning out of your photos, because we do not have access to your photos.

For Ente to be useful as a photos app, it has to make rediscovering memories simple. Now to run algorithms over your photos, all the computation must be done on the edge. This has been a novel, hard problem to solve.

This post is an overview of how we're delivering semantic search, while preserving your privacy.

Try it out on our desktop app here.

The Model - CLIP

CLIP (Contrastive Language-Image Pre-Training) is a widely used multi-modal neural network, trained on a large variety of image-text pairs to learn the relationship between images and text.

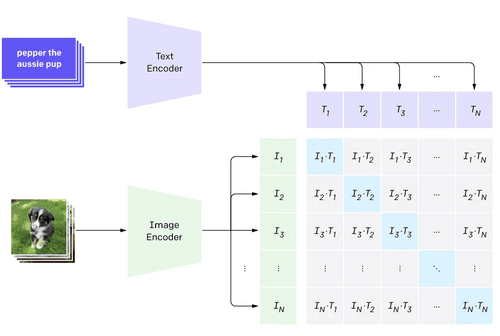

Architecturally, the model consists of two encoders, one for images and one for text. Each produces an embedding vector as output, which is a representation of the information content of its input as inferred by the model.

The training routine for the model is as follows: “Given an image predict which out of a set of 32,768 randomly sampled text snippets, was actually paired with it in our dataset” 1. This method forces the model to extract valuable features from the image to match the textual content, since it is penalised by the loss function otherwise.

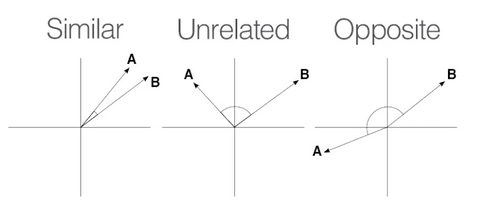

The “Contrastive” term in CLIP refers to the method of aligning the vector representations of each ground-truth pair while pushing apart all the other combinations, using a contrastive loss function.
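To make the idea concrete, here is a minimal sketch of a symmetric contrastive loss over a small batch, written in plain Python. It is an illustration under assumptions, not the actual CLIP training code: the function names are hypothetical, and the temperature is fixed at 0.07 for simplicity, whereas CLIP learns it during training. Image `i` is assumed to be paired with text `i`, and similarity is measured as the dot product of length-normalised embeddings.

```python
import math

def normalise(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def log_softmax(row):
    """Numerically stable log-softmax of a list of scores."""
    m = max(row)
    log_sum = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - log_sum for x in row]

def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric cross-entropy where image i is paired with text i.

    The temperature is an illustrative fixed value (an assumption).
    """
    images = [normalise(v) for v in image_embs]
    texts = [normalise(v) for v in text_embs]
    n = len(images)
    # logits[i][j]: scaled similarity between image i and text j
    logits = [
        [sum(a * b for a, b in zip(img, txt)) / temperature for txt in texts]
        for img in images
    ]
    # Image -> text: each row should favour its own (diagonal) text
    loss_images = -sum(log_softmax(logits[i])[i] for i in range(n)) / n
    # Text -> image: each column should favour its own (diagonal) image
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]
    loss_texts = -sum(log_softmax(columns[j])[j] for j in range(n)) / n
    return (loss_images + loss_texts) / 2

# Toy batch: correctly paired embeddings vs. the same texts swapped
aligned = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
swapped = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
print(aligned < swapped)
```

The loss is low when each image is most similar to its own text and high when the pairs are mismatched, which is the pressure that pulls true pairs together and pushes the rest apart.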

Given two embeddings A and B, we say that the two vectors are “similar” when the angle between them is small or, equivalently, when the cosine similarity between them is high.

Example

Imagine the embedding dimension is 3 and the text embeddings for the three words are given below. The cosine similarity of the (cat, tiger) pair is greater than that of the (dog, tiger) pair, indicating that to the model a cat and a tiger are much closer in meaning than a dog and a tiger.

cat: [0.25, 0.5, 0.25]

dog: [0.5, 0.1, 0.4]

tiger: [0.0, 0.9, 0.1]

# Cosine score calculation (ignoring the normalisation factor for simplicity)

cosine(cat, tiger) = (0.25*0.0 + 0.5*0.9 + 0.25*0.1) = 0.475

cosine(dog, tiger) = (0.5*0.0 + 0.1*0.9 + 0.4*0.1) = 0.130

In summary, the CLIP model learns an embedding space by jointly training the text and image encoders to maximise the cosine similarity between the embeddings of matching image-text pairs.
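The worked example ignores the normalisation factor. As a minimal sketch, here is the same calculation in Python with the normalisation included, plus a small helper that ranks candidates against a query embedding, which is the basic operation behind semantic search. The function names are hypothetical and the vectors are the toy values from the example, not real CLIP embeddings.

```python
import math

def cosine_similarity(a, b):
    """Dot product of a and b divided by the product of their lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def rank_by_similarity(query, candidates):
    """Return candidate names ordered from most to least similar to query."""
    return sorted(
        candidates,
        key=lambda name: cosine_similarity(query, candidates[name]),
        reverse=True,
    )

# Toy 3-dimensional embeddings from the example
embeddings = {
    "cat": [0.25, 0.5, 0.25],
    "dog": [0.5, 0.1, 0.4],
    "tiger": [0.0, 0.9, 0.1],
}

cat_tiger = cosine_similarity(embeddings["cat"], embeddings["tiger"])
dog_tiger = cosine_similarity(embeddings["dog"], embeddings["tiger"])
ranking = rank_by_similarity(
    embeddings["tiger"],
    {"cat": embeddings["cat"], "dog": embeddings["dog"]},
)
print(cat_tiger, dog_tiger, ranking)
```

With normalisation the scores come out at roughly 0.86 for (cat, tiger) and 0.22 for (dog, tiger), so the ordering from the simplified calculation is preserved.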

Great, so we now have a model that can tie photos to text queries. But since we don't have access to customer data, how do we run inference on the edge?

The Framework - GGML

GGML is a tensor framework, written completely in C, for running deep neural networks (mostly for inference) without any additional runtime. The llama.cpp and whisper.cpp projects are powered by GGML and act as guides for running complex models on the edge.

Since GGML does not allocate memory during runtime, the model graph is built during execution with the model weights read from the model GGUF, which is just a file format that GGML understands. For CLIP, we ended up building on top of the GGML implementation.

The other alternatives for running ML models on the edge are PyTorch Mobile, TFLite and ONNX. PyTorch and TensorFlow are mature ML frameworks that power the cutting edge of research in the field. Their counterparts on the edge are coming along very well, with support for different architectures.

One of the reasons we wanted to try GGML is that, since we can only run AI models on users' personal devices, we wanted a thin layer that can be shipped across platforms without a runtime.

Looking forward

We are excited to bring semantic search across all platforms and are currently testing out the implementation on Android and iOS. The sheer number of platforms and instruction sets is posing a challenge, but we'll get there 💪

Another feature of GGML is its support for quantisation (up to 4-bit), a technique for performing computations at lower precision, which reduces the compute and storage requirements.
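As a rough illustration of the idea, the sketch below maps a handful of floating-point weights to 4-bit signed integers with one shared scale, then restores them. This is a simplified example under assumptions and not GGML's actual quantisation format; the function names and weight values are made up for the example.

```python
def quantise_4bit(values):
    """Map floats to integers in [-8, 7] plus one shared float scale."""
    max_abs = max(abs(v) for v in values)
    # Choose the scale so the largest magnitude maps to 7
    scale = max_abs / 7 if max_abs > 0 else 1.0
    quants = [max(-8, min(7, round(v / scale))) for v in values]
    return quants, scale

def dequantise(quants, scale):
    """Recover approximate floats from the 4-bit integers."""
    return [q * scale for q in quants]

# Hypothetical weights, for illustration only
weights = [0.12, -0.53, 0.98, -0.07, 0.31, -0.88, 0.45, 0.02]
quants, scale = quantise_4bit(weights)
restored = dequantise(quants, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(quants, max_error)
```

Each weight now needs only 4 bits plus a share of the scale, at the cost of a rounding error of at most half the scale per value. That error is the accuracy price paid for the smaller, cheaper model.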

Since compute on the edge is expensive, we are exploring quantised models to find the right balance between convenience and usability.

This exercise of deploying Machine Learning on the edge has solidified our application stack for storing, syncing, and searching embeddings, all end-to-end encrypted.

Going ahead we have the flexibility to experiment with different frameworks and models to enable rediscovery in a meaningful way.

The ecosystem for running sophisticated AI models on-device is still maturing, and the work by the open-source community is something to look up to. Huge shoutout to the GGML project and the contributors of clip.cpp 🙏

We are excited to be building during this time.

If you'd like to hang out with a bunch of folks working on cutting Edge ML, join us on Discord / Matrix.