At Ente, we're building an end-to-end encrypted alternative to Google Photos.

Delivering reliable storage was the first problem.

Delivering discoverability over encrypted data has been the larger challenge.

The only way to provide a search experience that is comparable to Google Photos is by learning from your data at the only place it is accessible - your device.

Executing machine learning on the edge is fun. Given the constrained environments Ente runs on (mobile phones), every step is non-trivial - from running inference, to storing the computed embeddings, to executing search over these embeddings.

In this article I'll share how we arrived at an efficient storage layer for embeddings, to run semantic search with CLIP on mobile.

Embeddings

CLIP's image encoder returns a floating point array of 512 items for every photo you send to it. These are embeddings.

We now have to store these on disk, and execute a similarity search against the embedding corresponding to the user's search query.

While one can argue that Vector DBs have peaked, as of writing this post there are none that run out of the box on mobile devices. We're porting one, but that story is for another day.

On the brighter side of things, the number of embeddings we've to query over is small. An average user has < 100,000 photos, and running a brute force search over 100,000 items on midrange mobile devices takes < 500ms.

List<Photo> semanticSearch(String query) {

List<double> textEmbedding = getEmbedding(query);

List<Photo> results = [];

for (final photo in photos) {

double score = cosineScore(photo.embedding, textEmbedding);

if (score > THRESHOLD) {

results.add(photo);

}

}

return results;

}

double cosineScore(List<double> imageEmbedding, List<double> textEmbedding) {

double score = 0;

for (int i = 0; i < imageEmbedding.length; i++) {

score += imageEmbedding[i] * textEmbedding[i];

}

return score;

}500ms is an acceptable latency for a search that runs offline without network calls.

Now all we have to do is pick a database to store all embeddings.

Databases

File

Like every self-respecting engineer, we first considered piping the raw data in to a file.

But since we store computed embeddings (end-to-end encrypted) on our servers, we would need clean abstractions to find those that are yet to be synced and to modify those that have been updated on a different device.

It made little sense to reinvent the wheel.

SQLite

So the starting point was SQLite - the darling of edge databases.

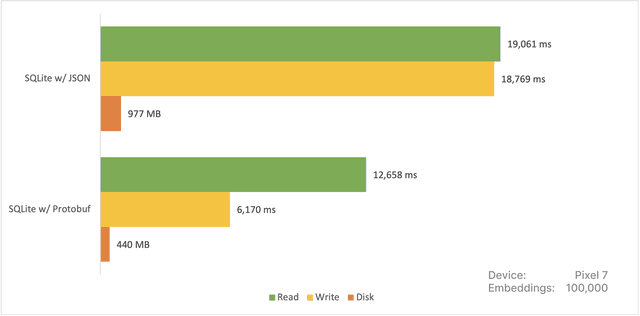

What I thought would be a short fairytale, did not have a happy ending. Writing and reading 100,000 serialized embeddings took ~19 seconds.

Writes are infrequent, so not a concern.

Reads are critical. You cannot search until all embeddings have been loaded.

Also, the database was taking up almost 1 GB of disk space.

It was clear that serialization was the culprit, so we switched to Protobuf instead of stringifying the embeddings. This resulted in considerable gains.

For 100,000 embeddings, writing took 6.2 seconds, reading 12.6 seconds and the disk space consumed dropped to 440 MB.

Not bad, but not good.

Update

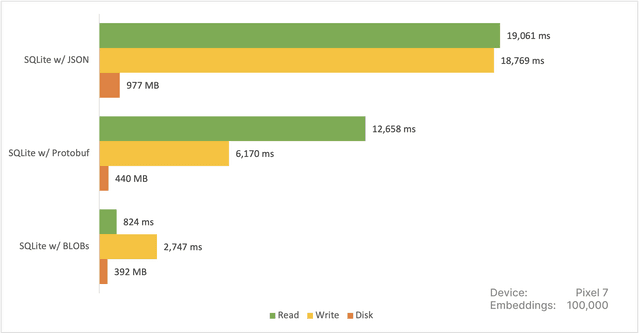

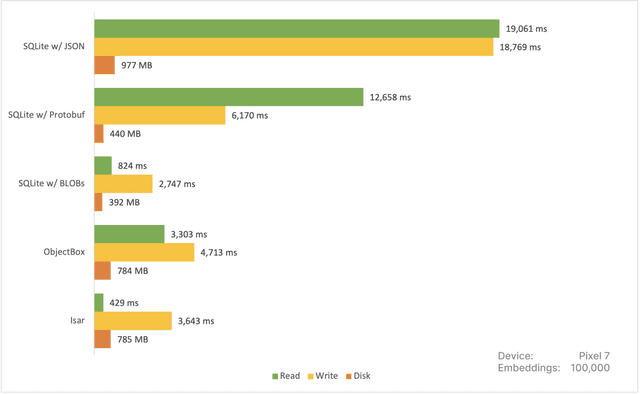

At this point, we moved on from SQLite, benchmarked more databases and submited our findings to HackerNews. This is where we were informed that using Protobuf was an overhead that could be short circuited by converting the array directly to its byte representation.

Shout out to lovasoa, the maintainer of sql.js for educating us. Implementing their recommendation resulted in major improvements.

Now this is the SQLite we knew and loved!

We were unaware of this optimization at the point of drafting this post, and this ignorance led us to explore more databases. Over all this turned out great, because we ended up discovering a tool that was a better fit for our use case.

ObjectBox

We next checked out ObjectBox - from the makers of EventBus.

Their documentation was lovely and integration a breeze.

Writes took 3.3s, reads took 4.7s, and the database consumed 782 MB of space. This was a significant improvement.

Before we could celebrate, we found out that ObjectBox was not open source. This wasn't immediately obvious from their repos, we should have dug deeper.

We have no right to complain, greenrobot has made massive contributions to open source, and we're nothing but grateful.

With Ente we have a strict policy of not bundling closed sourced dependencies, so we moved on.

Isar

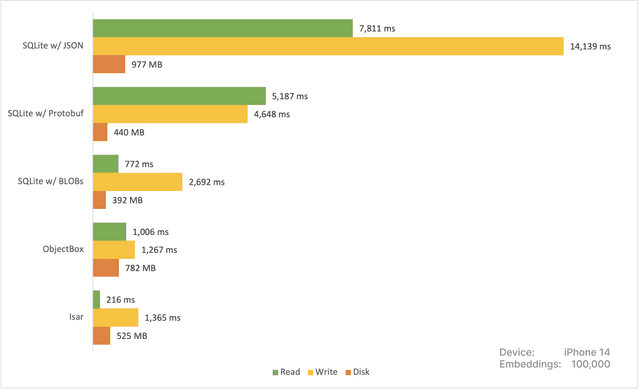

Enter the hero of our story - Isar - an open source (Apache 2.0 licensed), NoSQL database with ACID semantics, built for Flutter.

Integration was again a delight. A few annotations here, a few queries there, and we were rolling.

Writing 100,000 embeddings took 3.6 seconds, and reading under 500ms!

Isar's data format being very close to in-memory representation was making deserialization cheap for our floating point arrays.

Also, Isar was able to pack the data into 785 MB on Android, and 525 MB on iOS.

We had found our database! :)

Summary

Android

iOS

As much as we love SQLite, serialization and deserialization was turning out to be relatively more expensive. Parallelizing this step on the application layer would have helped but this felt like unnecessary complexity since Isar fit like a glove.

For future features (face recognition and clustering) we will need a vector DB. For now a dumb in-memory search will do the job, and Isar has proven itself to be the best candidate.

Thank you for reading this far! If you'd like a live feed of us battling machine learning on the edge, join our Discord!